Humans have pondered aliens since medieval times

For beings that are supposedly alien to human culture, extraterrestrials are pretty darn common. You can find them in all sorts of cultural contexts, from comic books, sci-fi novels and conspiracy theories to Hollywood films and old television reruns. There’s Superman and Doctor Who, E.T. and Mindy’s friend Mork, Mr. Spock, Alf, Kang and Kodos and My Favorite Martian. Of course, there’s just one hitch: They’re all fictional. So far, real aliens from other worlds have refused to show their faces on the real-world Earth — or even telephone, text or tweet. As the Italian physicist Enrico Fermi so quotably inquired during a discussion about aliens more than six decades ago, “Where is everybody?”

Scientific inquiry into the existence of extraterrestrial intelligence still often begins by pondering Fermi’s paradox: The universe is vast and old, so advanced civilizations should have matured enough by now to send emissaries to Earth. Yet none have. Fermi suspected that interstellar travel wasn’t feasible or that aliens didn’t think visiting Earth was worth the trouble. Others concluded that aliens simply don’t exist. Recent investigations indicate that harsh environments may snuff out nascent life long before it evolves the intelligence necessary for sending messages or traveling through space.

In any event, Fermi’s question did not launch humankind’s concern with visitors from other planets. Imagining other worlds, and the possibility of intelligent life-forms inhabiting them, did not originate with modern science or in speculative fiction. In the ancient world, philosophers argued about the possibility of multiple universes; in the Middle Ages the question of the “plurality of worlds” and possible inhabitants occupied the deepest of thinkers, spawning intricate and controversial philosophical, theological and astronomical debate. Far from being a merely modern preoccupation, life beyond Earth has long been a burning issue animating the human race’s desire to understand itself, and its place in the cosmos.

Other worlds, illogical

From ancient times Earth’s place was widely regarded as the center of everything. As articulated by the Greek philosopher Aristotle, the Earth was the innermost sphere in a universe, or world, surrounded by various other spheres containing the moon, sun, planets and stars. Those heavenly spheres, crystalline and transparent, rotated about the Earthly core comprising four elements: fire, air, water and earth. Those elements layered themselves on the basis of their essence, or “nature”: earth’s natural place was at the middle of the cosmos, which was why solid matter fell to the ground, seeking the inaccessible center far below.

On the basis of this principle, Aristotle deduced the impossibility of other worlds. If some other world existed, its matter (its “earth”) would seek both the center of its world and of our world as well. Such opposite imperatives posed a logical contradiction (which Aristotle, having more or less invented logic, regarded as a directly personal insult). He also applied further reasoning to point out that there is no space (no void) outside the known world for any other world to occupy. So, Aristotle concluded, two worlds cannot both exist.

Some Greeks (notably those advocating the existence of atoms) believed otherwise. But Aristotle’s view prevailed. By the 13th century, once Aristotle’s writings had been rediscovered in medieval Europe, most scholars defended his position.

But then religion leveled the philosophical playing field. Fans of other worlds got a chance to make their case.

In 1277, the bishop of Paris, Étienne Tempier, banned scholars from teaching 219 principles, many associated with Aristotle’s philosophy. Among the prohibited teachings on the list was item 34: that God could not create as many worlds as he wanted to. Since the penalty for violating this decree was excommunication, Parisian scholars suddenly discovered rationales allowing multiple worlds, empowering God to defy Aristotle’s logic. And since Paris was the intellectual capital of the European world, scholars elsewhere followed the Parisian lead.

While several philosophers asserted that God could make many worlds, most intimated that he probably wouldn’t have bothered. Hardly anyone addressed the likelihood of alien life, although both Jean Buridan in Paris and William of Ockham in Oxford did consider the possibility. “God could produce an infinite [number of] individuals of the same kind as those that now exist,” wrote Ockham, “but He is not limited to producing them in this world.”

Populated worlds showed up more prominently in writings by the renegade thinkers Nicholas of Cusa (1401–1464) and Giordano Bruno (1548–1600). They argued not only for the existence of other worlds, but also for worlds inhabited by beings just like, or maybe better than, Earth’s humans.

“In every region inhabitants of diverse nobility of nature proceed from God,” wrote Nicholas, who argued that space had no center, and therefore the Earth could not be central or privileged with respect to life. Bruno, an Italian friar, asserted that God’s perfection demanded an infinity of worlds, and beings. “Infinite perfection is far better presented in innumerable individuals than in those which are numbered and finite,” Bruno averred.

Burned at the stake for heretical beliefs (though not, as often stated, for his belief in other worlds), Bruno did not live to see the triumph of Copernicanism during the 17th century. Copernicus had placed the sun at the hub of a planetary system, making the Earth just one planet of several. So the existence of “other worlds” eventually became no longer speculation, but astronomical fact, inviting the notion of otherworldly populations, as the prominent Dutch scientist Christiaan Huygens pointed out in the late 1600s. “A man that is of Copernicus’ opinion, that this Earth of ours is a planet … like the rest of the planets, cannot but sometimes think that it’s not improbable that the rest of the planets have … their inhabitants too,” Huygens wrote in his New Conjectures Concerning the Planetary Worlds, Their Inhabitants and Productions.

A few years earlier, French science popularizer Bernard le Bovier de Fontenelle had surveyed the prospects for life in the solar system in his Conversations on the Plurality of Worlds, an imaginary dialog between a philosopher and an uneducated but intelligent woman known as the Marquise.

“It would be very strange that the Earth was as populated as it is, and the other planets weren’t at all,” the philosopher told the Marquise. Although he didn’t think people could live on the sun (if there were any, they’d be blinded by its brightness), he sided with those who envisioned inhabitants on other planets and even the moon.

“Just as there have been and still are a prodigious number of men foolish enough to worship the Moon, there are people on the Moon who worship the Earth,” he wrote.

From early modern times onward, discussion of aliens was not confined to science and philosophy. They also appeared in various works of fiction, providing plot devices that remain popular to the present day. Often authors used aliens as stand-ins for evil (or occasionally benevolent) humans to comment on current events. Modern science fiction about aliens frequently portrays them in the role of tyrants or monsters or victims, with parallels to real life (think Flash Gordon’s nemesis Ming the Merciless, a 1930s dictator, or the extraterrestrials of the 1980s film and TV show Alien Nation — immigrants encountering bigotry and discrimination). When humans look for aliens, it seems, they often imagine themselves.

Serious science

While aliens thrived in fiction, though, serious scientific belief in extraterrestrials — at least nearby — diminished in the early 20th century, following late 19th century exuberance about possible life on Mars. Supposedly a network of lines interpreted as canals signified the presence of a sophisticated Martian civilization; its debunking (plus further knowledge about planetary environments) led to general agreement that finding intelligent life elsewhere in the solar system was not an intelligent bet.

On the other hand, the universe had turned out to be vastly larger than the early Copernicans had imagined. The sun had become just one of billions of stars in the Milky Way galaxy, which in turn was only one of billions of other similar galaxies, or “island universes.” Within a cosmos so expansive, alien enthusiasts concluded, the existence of other life somewhere seemed inevitable. In 1961, astronomer Frank Drake developed an equation to gauge the likelihood of extraterrestrial life’s existence; by the 1990s he estimated that 10,000 planets possessed advanced civilizations in the Milky Way alone, even before anybody really knew for sure that planets outside the solar system actually existed.
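For readers curious how such an estimate is assembled, here is a minimal sketch of Drake’s equation in its standard form: seven factors multiplied together to give the number of communicating civilizations in the galaxy. The function name and the input values are hypothetical placeholders chosen only for illustration. They are not Drake’s own figures and are not meant to reproduce his estimate of 10,000.

```python
from math import prod


def drake_estimate(r_star, f_p, n_e, f_l, f_i, f_c, lifetime):
    """Multiply the seven Drake factors into a civilization count.

    r_star   -- average rate of star formation in the galaxy (stars per year)
    f_p      -- fraction of those stars that have planets
    n_e      -- number of planets per such system that could support life
    f_l      -- fraction of those planets on which life actually appears
    f_i      -- fraction of life-bearing planets that develop intelligence
    f_c      -- fraction of intelligent species that emit detectable signals
    lifetime -- years over which such a civilization keeps signaling
    """
    return prod([r_star, f_p, n_e, f_l, f_i, f_c, lifetime])


# Illustrative placeholder inputs only (assumptions, not measured values).
example = drake_estimate(
    r_star=1.0, f_p=0.5, n_e=2.0, f_l=1.0, f_i=0.1, f_c=0.1, lifetime=10_000
)
print(f"Estimated communicating civilizations: {example:.0f}")
```

Because the result is a simple product, any single factor near zero drags the whole estimate toward zero, which is why guesses about the less certain terms dominate the answer.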

But now everybody does. In the space of the last two decades, conclusive evidence of exoplanets, now numbering in the thousands, has reconfigured the debate and sharpened Fermi’s original paradox. No one any longer doubts that planets are plentiful. But still there’s been not a peep from anyone living on them, despite years of aiming radio telescopes at the heavens in hope of detecting a signal in the static of interstellar space.

Maybe such signals are just too rare or too weak for human instruments to detect. Or possibly some cosmic conspiracy is at work to prevent civilizations from communicating — or arising in the first place. Or perhaps civilizations that do arise are eradicated before they have a chance to communicate.

Or maybe the alien invasion has merely been delayed. Fermi’s paradox implicitly assumes that other civilizations have been around long enough to develop galactic transportation systems. After all, the universe, born in the Big Bang 13.8 billion years ago, is three times as old as the Earth. So most analyses assume that alien civilizations had a head start and would be advanced enough by now to go wherever they wanted to. But a new paper suggests that livable galactic neighborhoods may have developed only relatively recently.

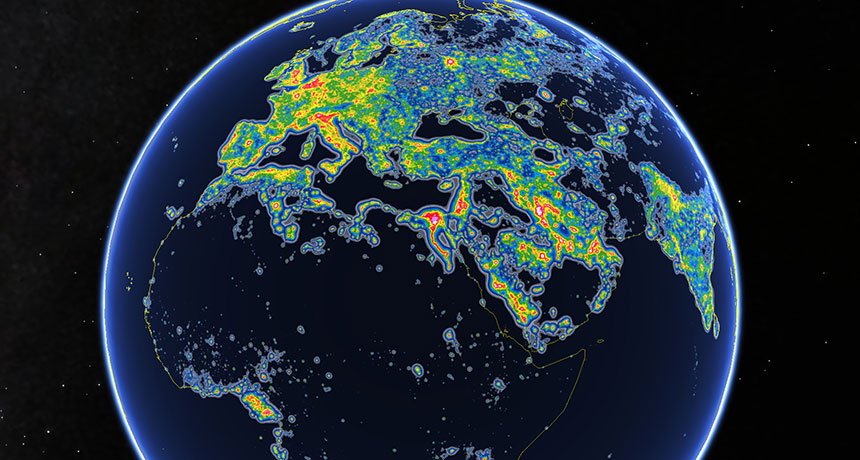

In a young, smaller and more crowded universe, cataclysmic explosions known as gamma-ray bursts may have effectively sterilized otherwise habitable planets, Tsvi Piran and collaborators suggest in a paper published in February in Physical Review Letters.

A planet near the core of a galaxy would be especially susceptible to gamma-ray catastrophes. And in a young universe, planets closer to the galactic edge (like Earth) would also be in danger from gamma-ray bursts in neighboring satellite galaxies. Only as the expansion of the universe began to accelerate — not so long before the birth of the Earth — would galaxies grow far enough apart to provide safety zones for life.

“The accelerated expansion induced by a cosmological constant slows the growth of cosmic structures, and increases the mean inter-galaxy separation,” Piran and colleagues write. “This reduces the number of nearby satellites likely to host catastrophic” gamma-ray bursts. So most alien civilizations would have begun to flourish not much before Earth’s did; those aliens may now be wondering why nobody has visited them.

Still, the radio silence from the sky makes some scientists wonder whether today’s optimism about ET’s existence will go the way of the Martian canal society. From one sobering perspective, aliens aren’t sending messages because few planets remain habitable long enough for life to develop an intelligent civilization. One study questions, for instance, how likely it is that life, once initiated on any planet, would shape its environment sufficiently well to provide for lasting bio-security.

In fact, that study finds, a wet, rocky planet just the right distance from a star — in the Goldilocks zone — might not remain habitable for long. Atmospheric and geochemical processes would typically drive either rapid warming (producing an uninhabitable planet like Venus) or quick cooling, freezing water and leaving the planet too cold and dry for life to survive, Aditya Chopra and Charles Lineweaver conclude in a recent issue of Astrobiology. Only if life itself alters these processes can it maintain a long-term home suitable for developing intelligence.

“Feedback between life and environment may play the dominant role in maintaining the habitability of the few rocky planets in which life has been able to evolve,” wrote Chopra and Lineweaver, both of the Australian National University in Canberra.

Yet even given such analyses, based on a vastly deeper grasp of astronomy and cosmology than medieval scholars possessed, whether real aliens exist remains one of those questions that science cannot now answer. It’s much like other profound questions also explored in medieval times: What is the universe made of? Is it eternal? Today’s scientists may be closer (or not) to answering those questions than were their medieval counterparts. Nevertheless the answers are not yet in hand.

Maybe we’ll just have to pose those questions to the aliens, if they exist, and are ever willing to communicate. And if those aliens do arrive, and provide the answers, humankind may well discover how medieval its understanding of the cosmos still is. Or perhaps the aliens will be equally clueless about nature’s deepest mysteries. As Fontenelle’s philosopher told the Marquise: “There’s no indication that we’re the only foolish species in the universe. Ignorance is quite naturally a widespread thing.”